How to run a BigQuery SQL using Python?

Contents

BigQuery API Client libraries

BigQuery API client libraries are used to run a BigQuery job programmatically. It calls the BigQuery api’s internally. Google Cloud offering BigQuery client libraries for different programming languages. In this tutorial, we are going to use the BigQuery Python client library.

Prerequisite to access BigQuery using Python

- Service account – In order to make a request to BigQuery API, we need to use a Service account. It belongs to our GCP project and it is used by the BigQuery Python client library to make a BigQuery API request.

- Service account key – To authenticate the service account , we need a service account private key JSON file. It can be used to obtain credentials for a service account. BigQuery verify the client identity using this key.

- roles – BigQuery has a number of predefined roles (user, dataOwner, dataViewer etc.) that we can assign to our Service account. Using that, we can control the access of our BigQuery resources(dataset, tables, etc)

- BigQuery Python client library – Install the BigQuery client library using pip command as below

pip install --upgrade google-cloud-bigquerySteps to run a BigQuery SQL using Python

Once we set up all the requirements, we can start writing the Python program to run BigQuery SQL. Let’s write the program step by step

Step 1: Import BigQuery and service account library

First we need to import bigquery and service_account libraries in the program

from google.cloud import bigquery

from google.oauth2 import service_accountStep 2: Get credentials for service account

Let’s use service_account.Credentials.from_service_account_file and obtain credentials for service account. Here we are passing the path of service account key file.

credentials = service_account. \

Credentials. \

from_service_account_file('/Users/rc_user_1/GCP/my-rcs-project-833123-ef45632b1b12.json')Step 3: Construct bigquery client

Using project id and credentials, we need to create a bigquery client as below. Here we set our project id as my-rcs-project.

project_id = 'my-rcs-project'

client = bigquery.Client(credentials=credentials, project=project_id)Step 4: Run the query

Now we can run the BigQuery using authenticated BigQuery client

query_job = client.query("""

SELECT *

FROM rc_fin_test_tables.test_py_table

LIMIT 1000 """)

results = query_job.result() # Waits for job to complete.Step 5: Display the query result

print("Total rows fetched: ", results.total_rows)

for row in results:

print(row)Example

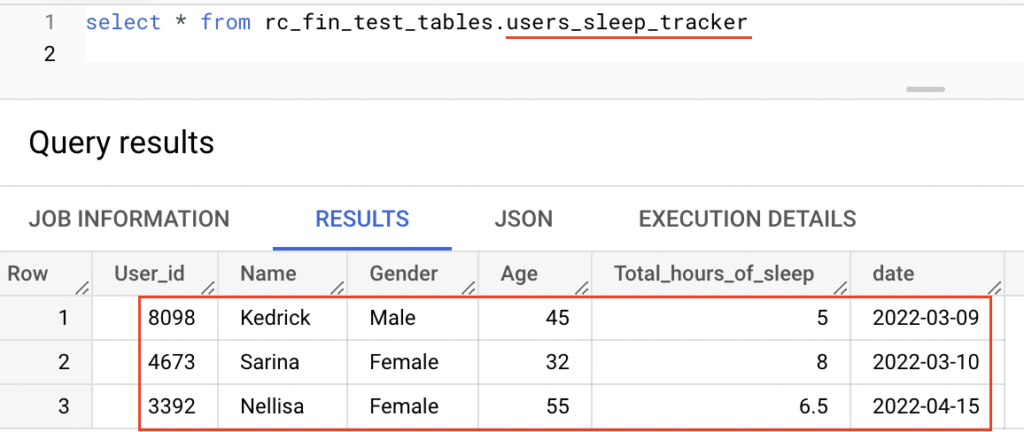

Consider that we have a table users_sleep_tracker in BigQuery dataset rc_fin_test_tables. It contains the records as below.

Read from BigQuery table

The below code fetch the records from BigQuery table users_sleep_tracker using Python.

from google.cloud import bigquery

from google.oauth2 import service_account

credentials = service_account. \

Credentials. \

from_service_account_file('/Users/rc_user_1/GCP/my-rcs-project-833123-ef45632b1b12.json')

project_id = 'my-rcs-project'

client = bigquery.Client(credentials=credentials, project=project_id)

query_job = client.query("""

SELECT *

FROM rc_fin_test_tables.users_sleep_tracker """)

results = query_job.result() # Waits for job to complete.

print("Total rows fetched: ", results.total_rows)

for row in results:

print(row)Output

Total rows fetched: 4

Row((7431, 'Victor', 'Male', 77, Decimal('9'), datetime.date(2022, 4, 25)), {'User_id': 0, 'Name': 1, 'Gender': 2, 'Age': 3, 'Total_hours_of_sleep': 4, 'date': 5})

Row((4673, 'Sarina', 'Female', 32, Decimal('8'), datetime.date(2022, 3, 10)), {'User_id': 0, 'Name': 1, 'Gender': 2, 'Age': 3, 'Total_hours_of_sleep': 4, 'date': 5})

Row((3392, 'Nellisa', 'Female', 55, Decimal('6.5'), datetime.date(2022, 4, 15)), {'User_id': 0, 'Name': 1, 'Gender': 2, 'Age': 3, 'Total_hours_of_sleep': 4, 'date': 5})

Row((8098, 'Kedrick', 'Male', 45, Decimal('5'), datetime.date(2022, 3, 9)), {'User_id': 0, 'Name': 1, 'Gender': 2, 'Age': 3, 'Total_hours_of_sleep': 4, 'date': 5})BigQuery Delete rows using Python

The below code deletes a row from BigQuery table users_sleep_tracker using Python. Similarly we can run the Insert or Update query in BigQuery using Python.

Please note that the service account should have IAM role “BigQuery Data Editor” to make change in the table.

from google.cloud import bigquery

from google.oauth2 import service_account

credentials = service_account. \

Credentials. \

from_service_account_file('/Users/rc_user_1/GCP/my-rcs-project-833123-ef45632b1b12.json')

project_id = 'my-rcs-project'

client = bigquery.Client(credentials=credentials, project=project_id)

query_job = client.query("""

delete from rc_fin_test_tables.users_sleep_tracker

where User_id = 7431;

""")

results = query_job.result() # Waits for job to complete.

print("Total rows affected: ", query_job.num_dml_affected_rows)Output

Total rows affected: 1

Recommended Articles

- How to execute a Select query on BigQuery using Java?

- How to schedule and run BigQuery using Cloud Composer?

- Delete and Truncate statement in BigQuery

References from GCP official documentation

Your Suggestions