How to load JSON data from Cloud storage to BigQuery?

Contents

Load JSON data from Cloud storage to BigQuery

The JSON file can be loaded from Cloud Storage to a new table/partition or append/overwrite an existing BigQuery table. While loading the JSON data, we need to keep in mind few limitations which are listed in GCP documentation. Any of the following method can be used to perform this load operation.

- The Google Cloud console

- The bq command-line tool’s bq load command

- The jobs.insert API method and configuring a load job

- The client libraries

- LOAD DATA DDL statement

In this tutorial, we are going to use LOAD DATA DDL statement.

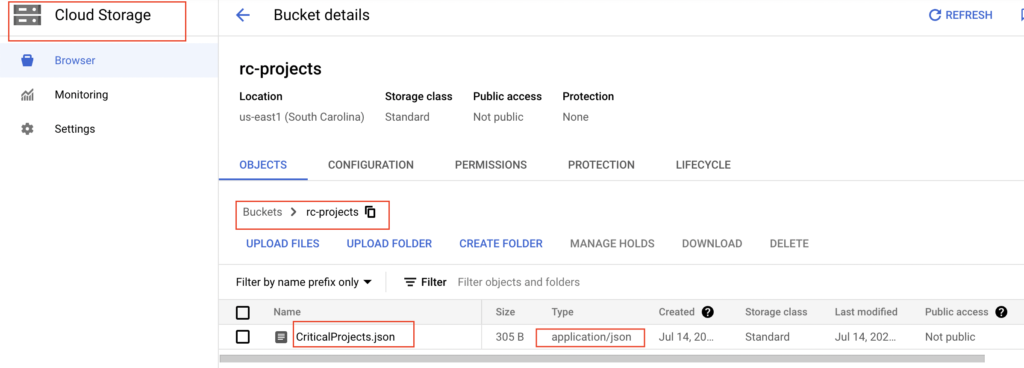

Step 1: Find the location of JSON file in Google Cloud Storage

We have a JSON file with the name of CriticalProjects.json in GCS bucket rc-projects. It consist of two json records as below.

As per the GCP documentation, we have added each JSON object on a separate line in that file.

|

1 2 |

{"employee_id" : 3490,"employee_name":"Stephin","org":"Compliance","projects":[{"name":"Geo-Aus","completed":false},{"name":"Domestic-Regulation","completed":true}]} {"employee_id" : 7621,"employee_name":"Natalia","org":"Platform","projects":[{"name":"Customer360","completed":false},{"name":"Enterprise Operation","completed":true}]} |

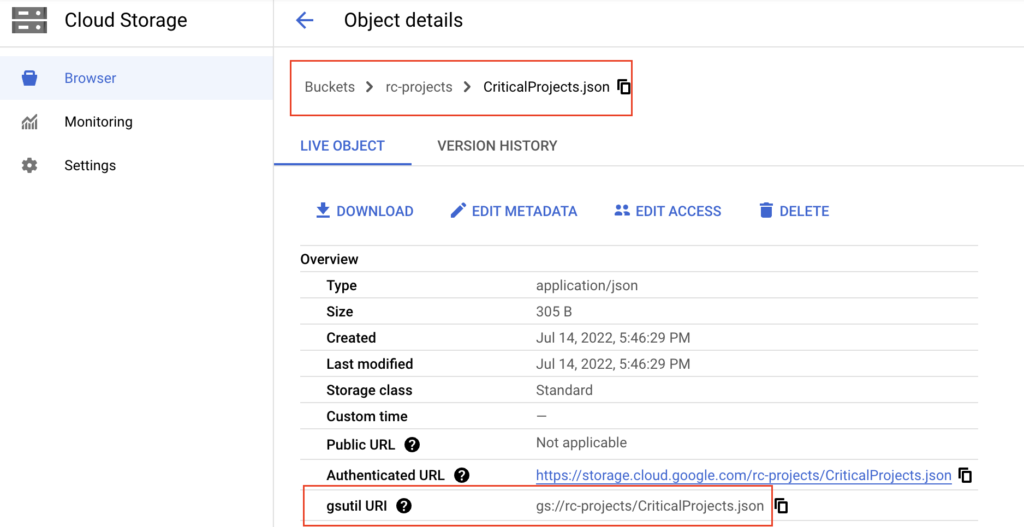

We need to find the path of this file in GCS. If we click our json file in Google Cloud Console, we can get the path of this resource as below.

Step 2 : Define BigQuery table schema as per JSON data

Next we need to define the schema of the table as per our JSON data. The schema contains the name of the column, data type and mode of the column. The following modes can be defined in the schema

- Nullable – It allow NULL values in the column (default).

- Required – NULL values are not allowed.

- Repeated – Column contains an array of values of the specified type.

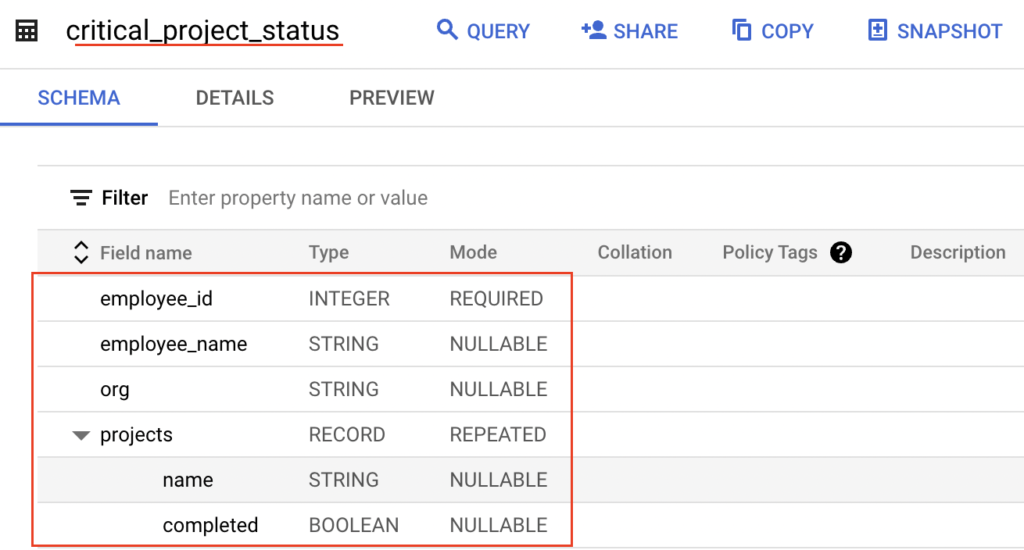

For our JSON data, we have prepared the below schema. Since the field projects has array of values in the JSON file, we have mentioned mode as REPEATED and type as RECORD. Also for the repeated JSON data, we have add the fields details.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 |

[ { "mode": "REQUIRED", "name": "employee_id", "type": "INTEGER" }, { "mode": "NULLABLE", "name": "employee_name", "type": "STRING" }, { "mode": "NULLABLE", "name": "org", "type": "STRING" }, { "mode": "REPEATED", "name": "projects", "type": "RECORD", "fields": [{"name": "name", "type": "STRING"}, {"name": "completed", "type": "BOOL"}] } ] |

BigQuery has schema auto deduction feature by default. If we want, we can skip this schema preparation step and proceed to run the LOAD DATA DDL statement.

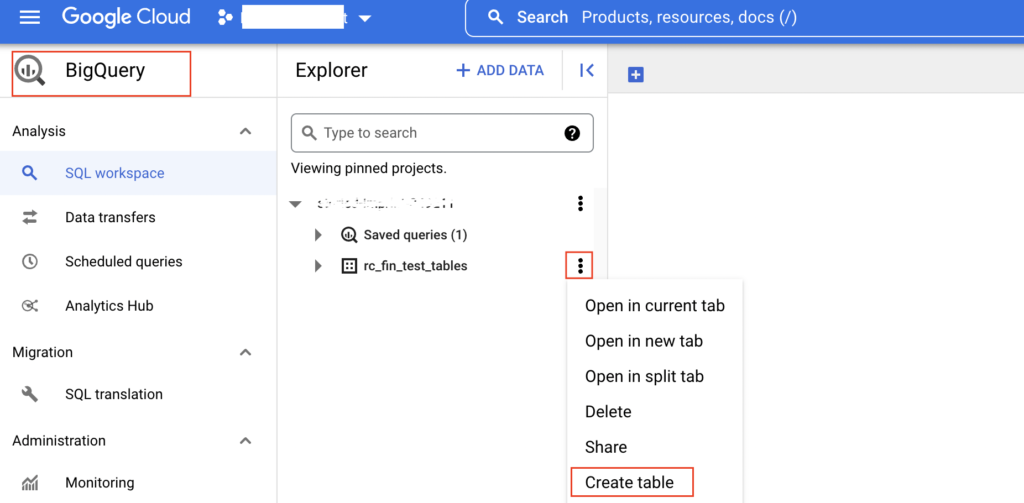

Step 3 : Create a BigQuery table

Let’s create the BigQuery table using the schema that we prepared. As we shown below, we need to click the three dots near to our dataset rc_fin_test_tables and select Create table option.

It will open a pop up page where we need to specify the table name and schema. Here the dataset name is rc_fin_test_tables and table name is critical_project_status. As shown below, we need to provide the schema after enabling Edit as text option.

Once we click the CREATE TABLE button, it created the BigQuery table based on the given schema.

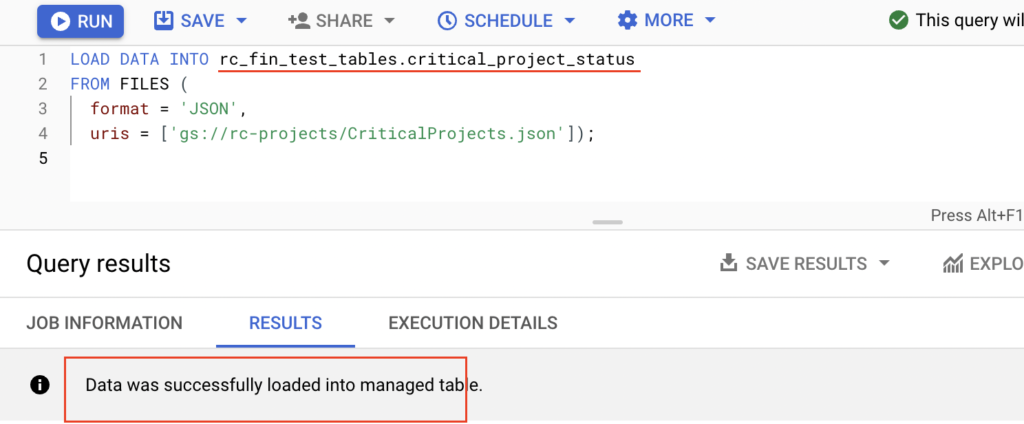

Step 4: Run Load Data DDL statement

Now we can run the LOAD DATA statement to load the JSON values into BigQuery table. The load options are mentioned to define the format and location of the source file.

- Source file format is JSON

- Source data location in GCS = gs://rc-projects/CriticalProjects.json

- Target BigQuery dataset is rc_fin_test_tables

- Target BigQuery table is critical_project_status

|

1 2 3 4 |

LOAD DATA INTO rc_fin_test_tables.critical_project_status FROM FILES ( format = 'JSON', uris = ['gs://rc-projects/CriticalProjects.json']); |

The Load data statement is executed successfully as below.

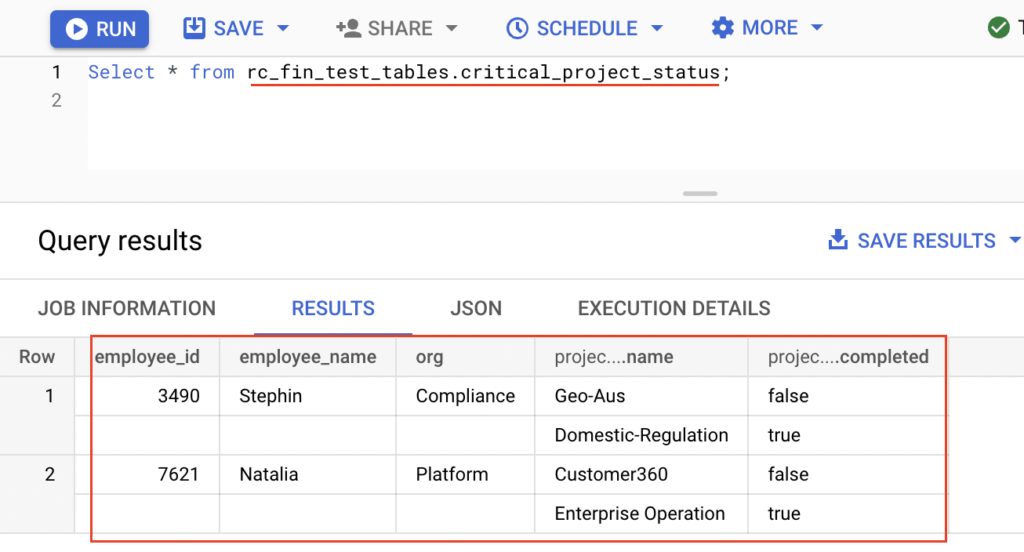

Let’s verify the data in BigQuery table critical_project_status. As expected, the given two JSON records are inserted into the table. The column projects has nested fields as name and completed.

Finally the JSON data is loaded from Google Cloud Storage gs://rc-projects/CriticalProjects.json to BigQuery table critical_project_status.

Recommended Articles

- Create table as Select, Create table Copy and Create table Like in BigQuery

- How to create an external table in BigQuery?

- How to create a view in BigQuery?