How to create a external table in Hive?

Contents

External Table in Hive

When we create the table in Hive, we can define the type of a table. As per the Hive 1.2 version, It has two types of table as below.

Internal (Managed) Table :It is a default table type in Hive. It means that if we not define the table type in the Create table statement, it automatically create it as Internal Table. It is a Hive owned tables. So Hive itself manage the metadata and actual data of the table. Hive stores the actual data of the internal table in the metastore. Internal table is also called as Managed table.

External Table : Hive metastore store only the schema(metadata) of the table. Hive doesn’t manage the data of the external table. Since the data of external table is stored outside the Hive metastore, the DROP table statement remove only the metadata of the table. The original data of the table is still maintained in the given HDFS location.

In this tutorial, we will look at the external table in Hive with examples.

Syntax to Create External Table in Hive

The keyword External is used in the Create table statement to define the External table in Hive. Also we need to give the location as a HDFS path where we want to store the actual data of the table. If we not provide the location, it will store the data in the default hdfs location that is configured in the system.

In addition, we can define the row format of the table like adding the delimiter between the fields. File format is required after the Stored as keyword. Also we can mention the table properties in the Create table Statement.

|

1 2 3 4 5 6 7 |

CREATE EXTERNAL TABLE [IF NOT EXISTS] <Database_Name>.<Table_Name>( Column_1 <data_Type>, Column_2 <data_Type>,....Column_N <data_Type>) [ROW FORMAT row_format] [STORED AS file_format] [LOCATION hdfs_path] [TBLPROPERTIES (property_name=property_value, ...)]; |

External table example in Hive

For this example, we will create a table called courier_details as External table in Hive

|

1 2 3 4 5 6 7 8 9 |

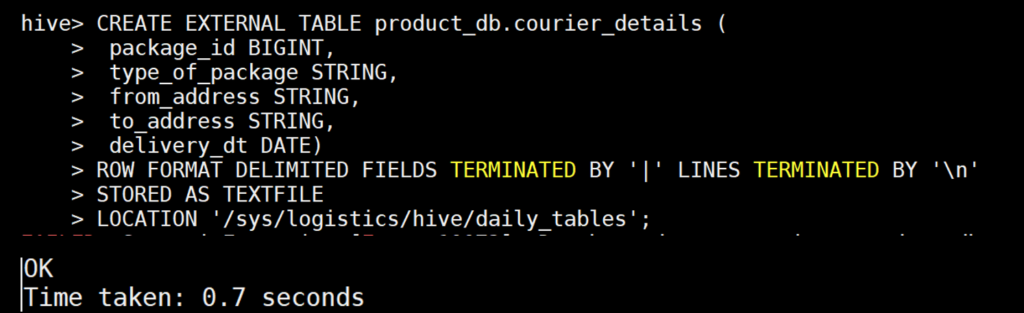

CREATE EXTERNAL TABLE product_db.courier_details ( package_id BIGINT, type_of_package STRING, from_address STRING, to_address STRING, delivery_dt DATE) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n' STORED AS TEXTFILE LOCATION '/sys/logistics/hive/daily_tables'; |

As we can see, we have defined the location of the table and other properties in the create table statement.

Drop the external table

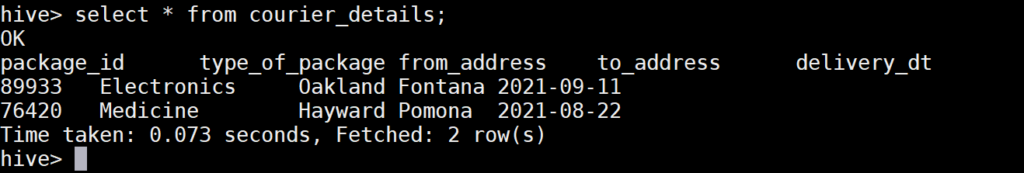

We have inserted few records into the courier_details table. Lets select the rows from that table.

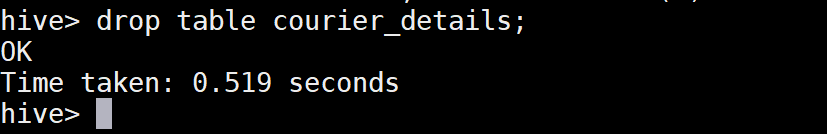

The table data are stored in the given hdfs location /sys/logistics/hive/daily_tables. Even though we drop this external table, we can still find the data in that hdfs location. Lets drop the table and check hdfs file.

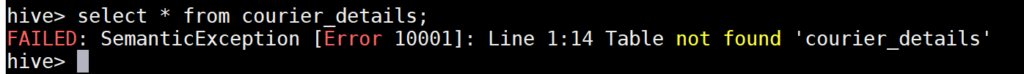

Hive just removed the meta data of the table. So if we try to select the data in Hive, it will show the error message as ” table not found”.

But we can find the data in the HDFS location that is mentioned in the create table statement.

|

1 2 3 4 |

$ hdfs dfs -ls /sys/logistics/hive/daily_tables Found 2 items -rwxrwxrwx 3 test_user_1 hdfs 53 2021-09-12 08:03 /sys/logistics/hive/daily_tables/000000_0.deflate -rwxrwxrwx 3 test_user_1 hdfs 49 2021-09-12 08:03 /sys/logistics/hive/daily_tables/000000_0_copy_1.deflate |

Using hdfs dfs -text command, we can see the data inside the hdfs file.

|

1 2 3 4 |

$ hdfs dfs -text /sys/logistics/hive/daily_tables/000000_0.deflate 89933|Electronics|Oakland|Fontana|2021-09-11 $ hdfs dfs -text /sys/logistics/hive/daily_tables/000000_0_copy_1.deflate 76420|Medicine|Hayward|Pomona|2021-08-22 |

We created the external table in Hive. Then we executed the drop statement to see whether Hive is removing only metadata of the table or not. As we expected, it removed only the meta data of the table. It didn’t remove the actual data of the table in HDFS.

Recommended Articles

- How to create a table with Partitions in Hive?

- How to create hourly partitions in Hive table?

- Copy the data or table structure from one table to another in Hive

- Create a Hive External table on Google Cloud Storage(GCS)