Create a Hive External table on Google Cloud Storage(GCS)

Contents

Google Cloud Storage (GCS)

Google Cloud Storage is a secure and scalable storage option offered by Google. It allows us to store the objects (images, videos, document and so on) in Google Cloud. It store the objects in container which is called as Bucket. The buckets are associated with the project that is created in Google cloud platform. In this tutorial , we will try to create a Hive External table in GCS bucket.

Prerequisites to create a Hive External table in GCS

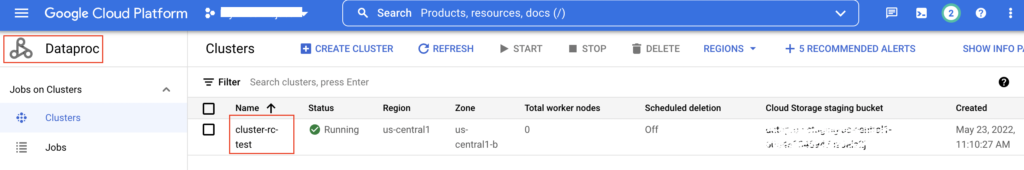

- Dataproc cluster – To perform the Big data processing, GCP providing a service called as Dataproc where many open source tools(Hadoop, Hive, Spark,.etc) are configured. We need to create a cluster with the name in Dataproc. We will be submitting a job to that cluster using gcloud command line tool.

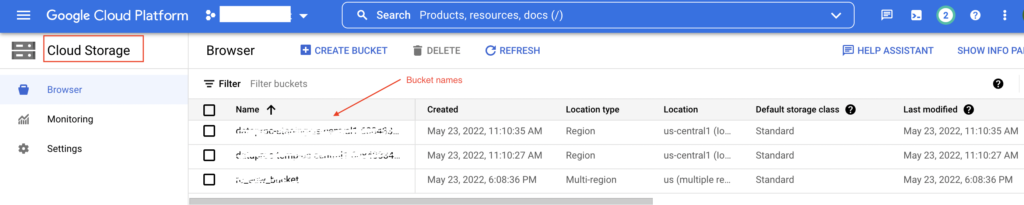

- Bucket in GCS – In the Cloud storage, we need to create a bucket with the name. It will be used as a location in the Hive create table statement. Example : gs://<bucket_name>

gcloud command to submit a Hive job

There are multiple ways to submit a job to Data proc cluster. Please refer Google Cloud documentation for more details.

- Dataproc API jobs submit

- Programmatic request

- Google Cloud CLI gcloud command-line tool

- Cloud Shell

- Google Cloud Console in browser

Google Cloud SDK providing a set of command line tools such as gcloud, gsutil and bq. The Google Cloud CLI (gcloud) is used to create and manage Google Cloud resources. In this example, we will submit a Hive job using gcloud command line tool.

Create a Hive external table using gcloud

Syntax

|

1 2 3 |

gcloud dataproc jobs submit hive --cluster=<cluster_name> \ -e="CREATE EXTERNAL TABLE <database_name>.<table_name>(column_1 <data_type>, <column_2> <data_type>,...) LOCATION 'gs://<bucket_name>';" |

Consider that we already created the Dataproc cluster with the name of cluster-rc-test. Similarly we created the GCS bucket rc_customers_bucket. In GCP Hive, we want to create an external table driver_details in the database cabs_db. Let’s write the CREATE TABLE statement with these values

|

1 2 3 4 5 6 7 8 |

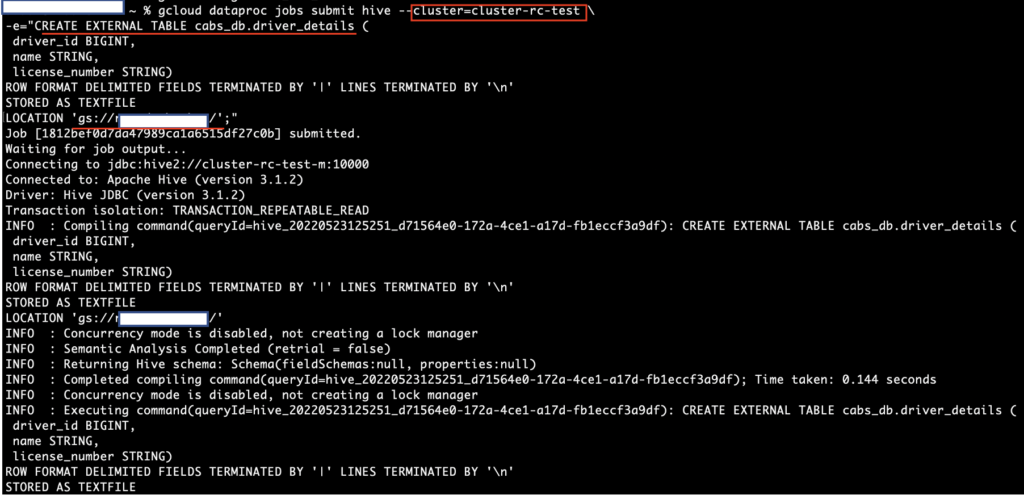

gcloud dataproc jobs submit hive --cluster=cluster-rc-test \ -e="CREATE EXTERNAL TABLE cabs_db.driver_details ( driver_id BIGINT, name STRING, license_number STRING) ROW FORMAT DELIMITED FIELDS TERMINATED BY '|' LINES TERMINATED BY '\n' STORED AS TEXTFILE LOCATION 'gs://rc_customers_bucket/';" |

gcloud command execution

We executed the gcloud command in our local machine where Google Cloud SDK is configured. So the command is executed successfully and created the external table driver_details in Hive. The location of the table is GCS bucket gs://rc_customers_bucket/.

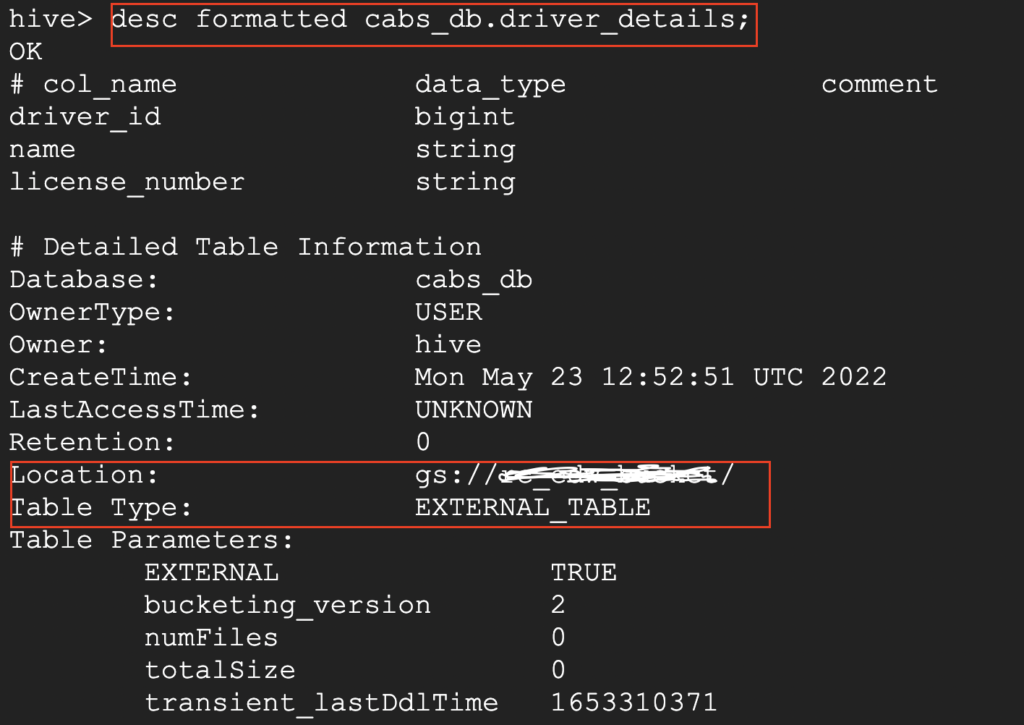

Next we logged into our Dataproc cluster cluster-rc-test using SSH in browser option. We can confirm that the table is created successfully on GCS bucket.

Insert records in Hive table

Let’s try to insert below records into the external table. We are directly running these queries in Dataproc cluster cluster-rc-test.

|

1 2 |

insert into driver_details values(1194,'Alex','A0002144'); insert into driver_details values(8321,'Martin','A0007721'); |

After executing the inserts, we can check those data in the gcs bucket. Here we used gsutil CLI command to check the files in GCS. For each record, separate file is created under the GCS bucket.

|

1 2 3 |

rc_user_1@ls-server ~ % gsutil ls gs://rc_customers_bucket/ gs://rc_customers_bucket/000000_0 gs://rc_customers_bucket/000000_0_copy_1 |

Finally we can see the data inside those files using gsutil cat command.

|

1 2 3 4 5 |

rc_user_1@ls-server ~ % gsutil cat gs://rc_customers_bucket/000000_0 1194|Alex|A0002144 rc_user_1@ls-server ~ % gsutil cat gs://rc_customers_bucket/000000_0_copy_1 8321|Martin|A0007721 |

Recommended Articles

- How to create a external table in Hive?

- Create table as Select, Create table Copy and Create table Like in BigQuery

- How to create an external table in BigQuery?

- How to access a Hive table using Pyspark?

References from GCP official documentation

- Submit a job in Dataproc cluster

- gsutil command

- install the gcloud CLI

- gcloud dataproc jobs submit hive