How to export data from BigQuery table to a file in Cloud Storage?

Contents

Export data to a file in BigQuery

After loading the data into BigQuery table, we may need that data in other environment. For that, we can use the Export data option in BigQuery. It export or extract the data from BigQuery to Cloud storage.

BigQuery providing different options to export the data.

- Using the Cloud console

- Using the bq extract command in the bq command-line tool

- Submitting an extract job via the API or client libraries

- Using EXPORT DATA statement

Also it supports different data formats (CSV, JSON, Avro & Parquet) and compression types for exported data. In this tutorial, we will export the BigQuery data using EXPORT DATA statement.

EXPORT DATA statement

The EXPORT DATA statement exports the results of a query to an external storage location. The storage location must be Cloud storage.

Syntax of EXPORT DATA statement

|

1 2 3 4 |

EXPORT DATA [WITH CONNECTION connection_name] OPTIONS (export_option_list) AS query_statement |

- connection_name – It is an optional argument. It defines a connection resource that has credentials for accessing the external data. It specify the connection name in the form PROJECT_ID.LOCATION.CONNECTION_ID.

- export_option_list – It defines the list of options for the export operation. The options includes URI of the destination, file format , field delimiter and so on. Please refer the export options list here.

- query_statement – It is a SELECT query. The result of the query is exported to the external destination.

Example

In this example, We are going to export the BigQuery table in CSV format. We have a table Product_reviews in BigQuery which contains the data as below.

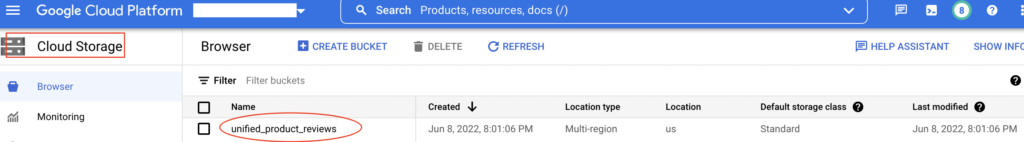

To place the extracted file in the cloud storage, we have created the bucket unified_product_reviews in Cloud storage.

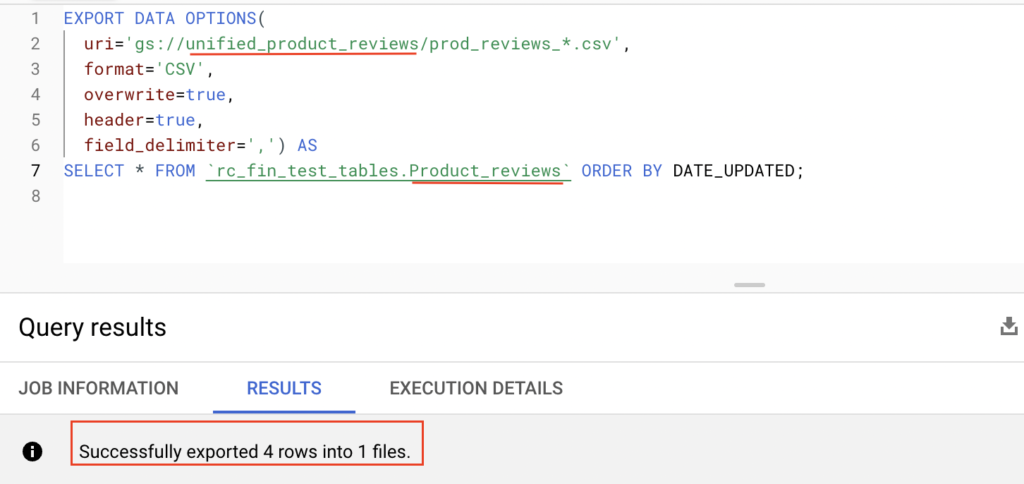

Now we can write the EXPORT DATA statement with these details.

|

1 2 3 4 5 6 7 8 |

EXPORT DATA OPTIONS( uri='gs://unified_product_reviews/prod_reviews_*.csv', format='CSV', overwrite=true, header=true, field_delimiter=',') AS SELECT * FROM `rc_fin_test_tables.Product_reviews` ORDER BY DATE_UPDATED; |

Export options in BigQuery

In the options, uri defines the location of the GCS bucket. If the exported file size is larger than 1 GB, it will create multiple files. So we have used the wildcard operator(*) in the file name prod_reviews_*.csv. It will replace the wildcard operator with number(starting 0). Similarly, other options defines the property of the file.

- format=’CSV’ – The exported file will be in CSV format.

- overwrite=true – It will overwrite the data if the file already exist in the destination location.

- header=true – The column names will be included as header in the exported file.

- field_delimiter=’,’ – The delimiter character comma(,) is used to split the column values.

Exported data in cloud storage

Let’s run the Export data statement and verify the result in the cloud storage.

From the table Product_reviews, all the rows are exported using EXPORT DATA statement. Also it placed the exported file in the Cloud storage. The file name is prod_reviews_000000000000.csv. It is created inside the GCS bucket unified_product_reviews.

gsutil command to verify the data

We can verify the exported data using download option in cloud console or gsutil command. Let’s verify the data using gsutil command in the local machine. It works if the cloud sdk is configured in that machine.

|

1 2 |

rc_user_1@ls-server ~ % gsutil ls gs://unified_product_reviews/ gs://unified_product_reviews/prod_reviews_000000000000.csv |

We could see the exported file using the gsutil ls command. Now we can verify the data using gsutil cat command.

|

1 2 3 4 5 6 |

rc_user_1@ls-server ~ % gsutil cat gs://unified_product_reviews/prod_reviews_000000000000.csv Product_id,User_id,review_info,Date_updated 98003,Kilian,"Acceptable quality, good fit.",2022-05-16 36382,Henrik,The fragrance was good,2022-05-28 56887,Alyssa,Way too strong and greasy!,2022-06-05 56901,rozzy,This stuff is amazing,2022-06-07 |

As we can see above, the data is exported successfully from the BigQuery table Product_reviews to Cloud storage bucket.

Recommended Articles

References from GCP official documentation